Section: New Results

Fundamentals of Interaction

Participants: Sarah Fdili Alaoui, Michel Beaudouin-Lafon, Cédric Fleury, Wendy Mackay, Theophanis Tsandilas.

To better understand fundamental aspects of interaction, ExSitu studies interaction in extreme situations. We conduct in-depth observational studies and controlled experiments, which contribute to theories and frameworks that unify our findings and help us generate new, advanced interaction techniques. Although we continue to explore the theory of Instrumental Interaction in the context of multi-surface environments [23], we are also extending it into a wider framework that we call information substrates. This work has resulted in several prototypes, such as Webstrates [18]. We also continue to study elementary interaction tasks in large-scale environments, such as pointing [11] and object manipulation [15].

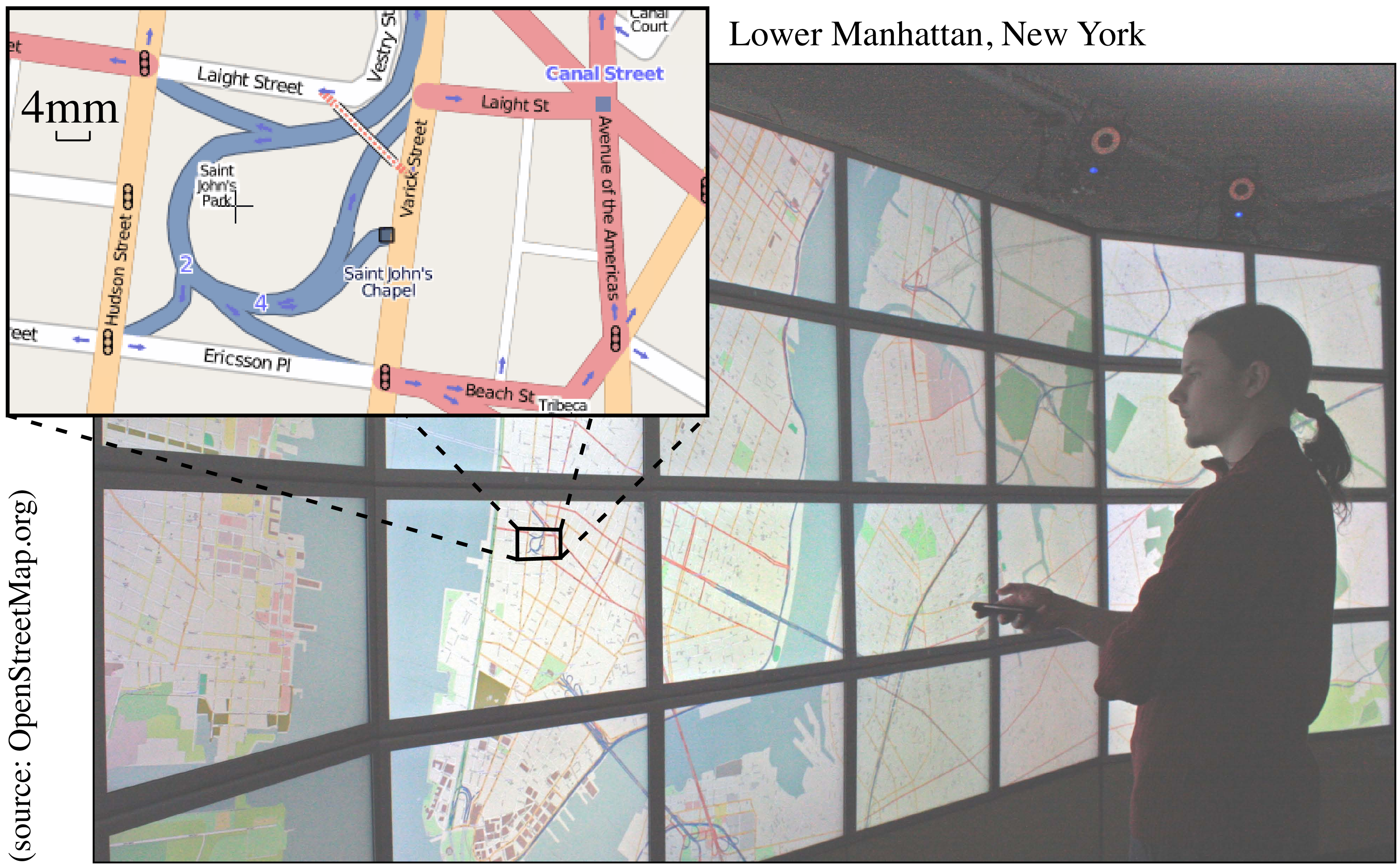

Information substrates – “Instrumental interaction” argues that, since our interaction with the physical world is often mediated by tools, or instruments, our interaction with the digital world should be mediated in the same way. Our work on multi-surface environments has demonstrated the value of this model, for example, to support distributed interfaces in which the user controls the content of a wall-sized display using handheld devices [23]. Instrumental interaction does not, however, describe the “objects of interest” that instruments interact with, nor does it explain how an object becomes an instrument, or how users appropriate instruments in unexpected ways (the principle of “co-adaptation”).

“Information substrates” embrace a wider scope than instrumental interaction: both objects and instruments are “substrates” that hold information and behavior, and can be combined in arbitrary ways. What makes an object an instrument is defined not by what it is but by how the user uses it. We started to explore this concept with Webstrates [18], a web-based environment in which content and tools are embedded in the same information substrate, in this case the Document Object Model (DOM) (Figure 7).
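The idea that content and tools live in the same substrate can be illustrated with a minimal sketch. It is not the Webstrates API: the `<tool>` element, its `transform` attribute, the `TRANSFORMS` table and the `apply_tool` helper are all hypothetical names chosen for this example, and Python's `xml.etree.ElementTree` merely stands in for the DOM.

```python
import xml.etree.ElementTree as ET

# A single tree plays the role of the shared substrate (the DOM in Webstrates).
# It holds both a piece of content (<p>) and a tool (<tool>); both are plain nodes.
substrate = ET.fromstring(
    "<document>"
    "<p id='note'>hello substrate</p>"
    "<tool id='shout' transform='upper'/>"
    "</document>"
)

# Hypothetical behaviours that a tool node can name in its 'transform' attribute.
TRANSFORMS = {
    "upper": str.upper,
    "reverse": lambda text: text[::-1],
}


def apply_tool(root, tool_id, target_id):
    """Look up a tool node and a content node in the same tree, then apply the tool."""
    tool = root.find(f".//*[@id='{tool_id}']")
    target = root.find(f".//*[@id='{target_id}']")
    if tool is None or target is None:
        raise KeyError("tool or target not found in the substrate")
    behaviour = TRANSFORMS[tool.get("transform")]
    target.text = behaviour(target.text or "")


# Use the tool on the content.
apply_tool(substrate, "shout", "note")
after_first = substrate.find(".//*[@id='note']").text

# Because the tool is itself a node in the substrate, it can be edited like content.
substrate.find(".//*[@id='shout']").set("transform", "reverse")
apply_tool(substrate, "shout", "note")
after_second = substrate.find(".//*[@id='note']").text
```

In this sketch the tool is not a special kind of object: it becomes an instrument only when `apply_tool` uses it as one, and it can be modified with the same operations as the content it acts on.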

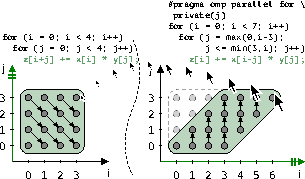

Our work on information substrates has influenced other projects in the group. For example, our work on tools to help programmers parallelize and optimize their code [22] uses coordinated views of the code: a traditional text view and a graphical polyhedral visualization (Figure 2). These two substrates afford different types of manipulation by the user, but share the same underlying information, i.e., the algorithm being designed. The SketchSliders technique [20], described in the following section, provides users with an easily customizable approach to controlling complex visual displays. SketchSliders act as a substrate for creating slider instruments, which are independent from but tightly coupled with the visual display they control. By letting users define their own sliders, we solve the long-standing problem of combining power and simplicity. Finally, the ColorLab prototypes [17], also described in the following section, provide artists and graphic designers with substrates that offer novel ways to interact with and display color relationships.


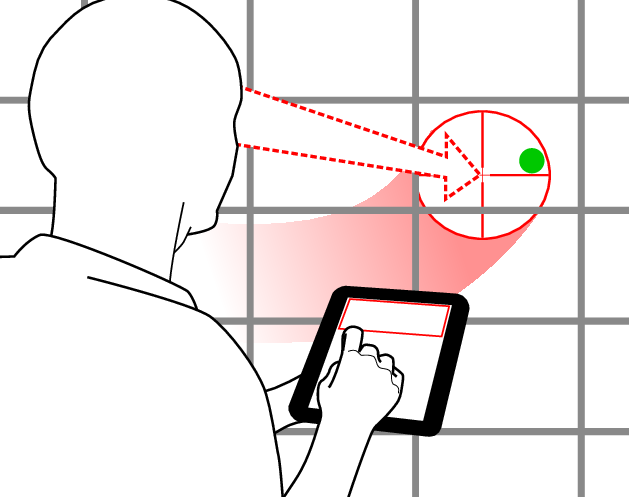

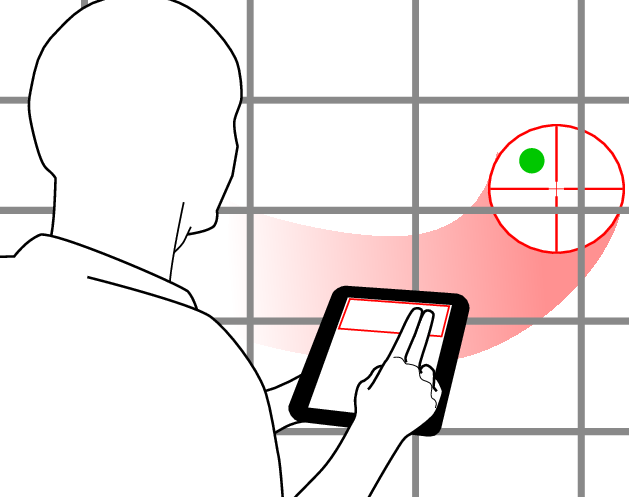

Interaction in the large – ExSitu and its predecessor InSitu have a long history of studying the most fundamental action in visual environments: pointing. We recently published an extensive 64-page journal article [11] on our studies of pointing on large, wall-sized displays. In such environments, users must be able to point from a distance, typically up to a few meters from the screen, with great accuracy. Existing techniques are ill-suited for this task, due to the combination of the high index of difficulty and the constraint that users must be able to move around in the room while pointing.
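To give a sense of what a "high index of difficulty" means here, the sketch below computes the index of difficulty using the Shannon formulation of Fitts's law, ID = log2(D/W + 1), where D is the distance to the target and W its width. The choice of this formulation and all numeric values are assumptions made for illustration; they are not taken from [11].

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts's index of difficulty, in bits.

    distance and width must be expressed in the same unit.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1.0)


# Hypothetical desktop task: a 200 mm movement to a 10 mm target.
desktop_id = index_of_difficulty(200.0, 10.0)

# Hypothetical wall-display task: a 4000 mm movement to a 4 mm target.
wall_id = index_of_difficulty(4000.0, 4.0)

print(f"desktop: {desktop_id:.2f} bits, wall: {wall_id:.2f} bits")
```

Under these assumed values the wall-display task is more than twice as difficult, in bits, as the desktop task, which illustrates why techniques tuned for desktop pointing do not transfer directly.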

We have designed and tested a number of techniques, including dual-mode techniques that combine two phases: coarse pointing with direct techniques, such as ray-casting or using the orientation of the head, and fine pointing with relative techniques, such as using a hand-held touchpad (Figure 3). Rather than proposing the “ultimate” pointing technique for such environments, we provide a set of criteria, a set of techniques derived from those criteria, and a calibration technique for optimizing the transfer functions used by relative pointing techniques under extreme conditions.
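The dual-mode principle described above can be sketched as follows. This is a simplified illustration, not the implementation evaluated in [11]: the wall is assumed to be the plane z = 0, and the `gain` transfer function, its parameters and the function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Cursor:
    x: float  # horizontal position on the wall, in metres
    y: float  # vertical position on the wall, in metres


def ray_cast(origin, direction):
    """Intersect a ray with the wall plane z = 0; return (x, y) or None if it misses."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        return None  # ray is parallel to the wall
    t = -oz / dz
    if t <= 0:
        return None  # wall is behind the user
    return (ox + t * dx, oy + t * dy)


def gain(speed, g_min=0.2, g_max=4.0, v_sat=0.5):
    """Hypothetical transfer function: gain grows linearly with finger speed, then saturates."""
    ratio = min(max(speed / v_sat, 0.0), 1.0)
    return g_min + (g_max - g_min) * ratio


def coarse_point(cursor, origin, direction):
    """Coarse mode: absolute (direct) pointing by ray-casting."""
    hit = ray_cast(origin, direction)
    if hit is not None:
        cursor.x, cursor.y = hit


def fine_point(cursor, delta, dt):
    """Fine mode: relative pointing; touchpad displacement scaled by a speed-dependent gain."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    dx, dy = delta
    g = gain(math.hypot(dx, dy) / dt)
    cursor.x += g * dx
    cursor.y += g * dy


cursor = Cursor(0.0, 0.0)
# User stands 3 m from the wall and points slightly to the right.
coarse_point(cursor, origin=(1.0, 1.5, 3.0), direction=(0.5, 0.0, -1.0))
coarse_position = (cursor.x, cursor.y)
# A slow 1 mm finger movement on the touchpad refines the position.
fine_point(cursor, delta=(0.001, 0.0), dt=0.01)
```

In this sketch, slow finger movements receive a gain below 1, so the cursor moves less than the finger and small targets remain reachable, while fast movements receive a higher gain. The parameters of such a function are the kind of values a calibration procedure would tune.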


In collaboration with the Inria REVES group in Sophia Antipolis, we proposed a framework for analyzing 3D object manipulation in immersive environments [15]. We decomposed 3D object manipulation into its component movements, taking into account both physical constraints and mechanics. We then fabricated five physical devices that simulate these movements in a measurable way under experimental conditions. We implemented the devices in an immersive environment and conducted an experiment to compare direct finger-based object manipulation with ray-based object manipulation. We identified the compromises required when designing devices that (i) are reproducible in both real and virtual settings, and (ii) can be used in experiments to measure user performance.